Here are ways you can write Web Scrapers that cannot be blocked. Well, in most cases.

For this tutorial, I will show you some code as we go along, and I will be using Python as the language and the requests library as the library of choice to keep everything simple.

User-Agent String

The first thing to get right is the User-Agent string. It will tell the web server to treat you like a regular web browser. User-agent strings were initially designed to allow web servers to serve content based on the environment that the user was in, like the OS and the browser version. But it can just as quickly be used to block non-frequent requests. Here is a bunch of user agent string that you can get publicly.

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url = 'https://www.amazon.com/s?k=shampoo&ref=nb_sb_noss_1'

response=requests.get(url,headers=headers)In many cases, this is enough, but in some cases, you will need to rotate them.

A simple user-agent string rotator in Python (Refer to the article by this name)

Setting the request headers

Web browsers typically send all sorts of headers as standard practice. An intelligent algorithm will see the absence of this and might reject the connection. One of the ways to, therefore, “BE” a web browser is to send everything a web browser sends.

It is everything my web browser sends when I go to Amazon.com. Look at the Headers tab in the Chrome inspector tool.

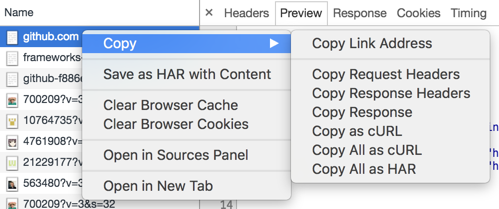

One of the ways to replicate this exactly is to the right click on the request and click on Copy → Copy as CURL.

It will give you all the headers that Chrome sends as a CURL request.

curl 'https://www.amazon.com/' -H 'authority: www.amazon.com' -H 'pragma: no-cache' -H 'cache-control: no-cache' -H 'upgrade-insecure-requests: 1' -H 'user-agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.106 Safari/537.36' -H 'sec-fetch-dest: document' -H 'accept: text/html,application/xhtml xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9' -H 'sec-fetch-site: none' -H 'sec-fetch-mode: navigate' -H 'sec-fetch-user: ?1' -H 'accept-language: en-US,en;q=0.9' -H 'cookie: skin=noskin; session-id=147-3918296-4756121; session-id-time=2082787201l; i18n-prefs=USD; sp-cdn="L5Z9:IN"; ubid-main=135-7172901-4565431; x-wl-uid=1PiQc/ hTOaGN /cmc0wqiK2NLxhvvz HOk6QfdATg6PuxHbLoYJI4cg exSMLVwWKXR1JmWvENk=; session-token=RH1oealGMfoPl73UY763aRBVtXBa3XhDyOHLBvpeAyGeAdmLtsqZGVC/Otaqzbbn1 JGkybIgRyjJ9pKlBM27lKH/nxR7wwxWcLSjpuuuMsx/5z6LIK SnGQIfsUG1ekh84XDiWfUBB6CDEVXNGUi24s0 1woBwVHnkSErK5HhEvZkkcIhW8ERVL3UdUK8KCDnPjcMgkJ03U0bsoB3YxiN/h03U05mvxHh98vVjvPYSVDmh Nxwbrc69YW6N0nznFSFHLSdfmlE=; csm-hit=tb:s-A6T9WH64KMZ4RJ4RKZ43|1581771097931&t:1581771101526&adb:adblk_no; cdn-session=AK-991556fdabde9e3afb9f2bdd35812299; cdn-rid=9732D41758170D2DE324' --compressedYou can use a tool to convert this into Python-compatible code.

Here is the output..

import requests

headers = {

'authority': 'www.amazon.com',

'pragma': 'no-cache',

'cache-control': 'no-cache',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.106 Safari/537.36',

'sec-fetch-dest': 'document',

'accept': 'text/html,application/xhtml xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

'sec-fetch-site': 'none',

'sec-fetch-mode': 'navigate',

'sec-fetch-user': '?1',

'accept-language': 'en-US,en;q=0.9',

'cookie': 'skin=noskin; session-id=147-3918296-4756121; session-id-time=2082787201l; i18n-prefs=USD; sp-cdn="L5Z9:IN"; ubid-main=135-7172901-4565431; x-wl-uid=1PiQc/ hTOaGN /cmc0wqiK2NLxhvvz HOk6QfdATg6PuxHbLoYJI4cg exSMLVwWKXR1JmWvENk=; session-token=RH1oealGMfoPl73UY763aRBVtXBa3XhDyOHLBvpeAyGeAdmLtsqZGVC/Otaqzbbn1 JGkybIgRyjJ9pKlBM27lKH/nxR7wwxWcLSjpuuuMsx/5z6LIK SnGQIfsUG1ekh84XDiWfUBB6CDEVXNGUi24s0 1woBwVHnkSErK5HhEvZkkcIhW8ERVL3UdUK8KCDnPjcMgkJ03U0bsoB3YxiN/h03U05mvxHh98vVjvPYSVDmh Nxwbrc69YW6N0nznFSFHLSdfmlE=; csm-hit=tb:s-A6T9WH64KMZ4RJ4RKZ43|1581771097931&t:1581771101526&adb:adblk_no; cdn-session=AK-991556fdabde9e3afb9f2bdd35812299; cdn-rid=9732D41758170D2DE324',

}

response = requests.get('https://www.amazon.com/', headers=headers)Now you got code clever enough to get you past most web servers, but it won’t scale. To thousands of links, then you will find that you will get IP blocked easily by many websites as well. In this scenario, using a rotating proxy service to rotate IPs is almost a must.

Otherwise, you tend to get IP blocked a lot by automatic location, usage, and bot detection algorithms.

Our rotating proxy server Proxies API provides a simple API that can solve all IP Blocking problems instantly.

a) With millions of high speed rotating proxies located all over the world.

b) With our automatic IP rotation.

c) With our automatic User-Agent-String rotation (which simulates requests from different, valid web browsers and web browser versions).

d) With our automatic CAPTCHA solving technology

Hundreds of our customers have successfully solved the headache of IP blocks with a simple API.

A simple API can access the whole thing like the below in any programming language.

You don’t even have to take the pain of loading Puppeteer as we render Javascript behind the scenes, and you can get the data and parse it in any language like Node, Puppeteer, or PHP or using any framework like Scrapy or Nutch. In all these cases, you can call the URL with render support like so.

curl "http://api.proxiesapi.com/?key=API_KEY&render=true&url=https://example.com"We have a running offer of 1000 API calls completely free. Register and get your free API Key here.

The author is the founder of Proxies API the rotating proxies service.